ANOVA, Tukey, and their non-parametric versions

One-way ANOVA

A parametric test designed to compare the means of two or more samples.

H0: the means of all samples are equal.

Assumptions:

- Normality (each sample is taken from a normally distributed population)

- Sample independence

- Variance equality, i.e. homoscedasticity (the variance of the data should be the same in every group)

(From my previous F test blog)

For ANOVA, the F statistic is

F = (explained variance) / (unexplained variance), or

F = (between-group variability) / (within-group variability) .

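In both cases, the numerator and the denominator are each built from a sum of squared differences between a set of values of interest and a mean (group means vs. the overall mean in the numerator, individual observations vs. their own group mean in the denominator), divided by the corresponding degrees of freedom. This is why F is conceptually a ratio of variances (variability).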
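To make the between/within ratio concrete, here is a minimal sketch in plain Python. The function name `one_way_anova_f` and the data are made up for illustration; it only computes the statistic and degrees of freedom, not a p-value.

```python
from statistics import mean

def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of samples."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)
    k = len(groups)
    n_total = len(all_values)

    # Between-group sum of squares: group means vs. the overall mean
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Within-group sum of squares: observations vs. their own group mean
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within

# Made-up data: three groups of three observations each
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
f_stat, df_between, df_within = one_way_anova_f(groups)
print(f_stat, df_between, df_within)
```

The resulting F would then be compared against an F distribution with (df_between, df_within) degrees of freedom.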

What is two-way ANOVA?

Two-way ANOVA examines the effect of two factors on a continuous dependent variable, and also tests whether the two factors interact, i.e. whether the effect of one factor on the dependent variable depends on the level of the other.

What’s ANOVA’s role in typical linear regression output?

http://www.stat.yale.edu/Courses/1997-98/101/anovareg.htm

It is about how well the model fits, from the perspective of variance. The F statistic is the model mean square (the variance explained by the model) divided by the mean square error (the residual variance), so it becomes large when the explanatory variable(s) explain the data well.

R² in linear regression can also be described with the quantities from ANOVA: R² = SSM/SST, i.e. the model sum of squares (how much variation the model explains) divided by the total sum of squares.

In multiple linear regression, H0 of the F test is that all coefficients (other than the intercept) are 0. H1 is that at least one of them is non-zero.
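As a rough illustration of how R² and F come out of the same sums of squares, here is a sketch for the simplest case, a regression with one explanatory variable fitted by ordinary least squares. The function name `regression_anova` and the data are hypothetical.

```python
from statistics import mean

def regression_anova(x, y):
    """Return (R^2, F) for a simple linear regression of y on x."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    fitted = [intercept + slope * xi for xi in x]

    ssm = sum((fi - y_bar) ** 2 for fi in fitted)           # model sum of squares
    sse = sum((yi - fi) ** 2 for yi, fi in zip(y, fitted))  # residual sum of squares
    sst = ssm + sse                                         # total sum of squares

    p = 1        # number of explanatory variables
    n = len(x)
    f_stat = (ssm / p) / (sse / (n - p - 1))  # model mean square / mean square error
    r2 = ssm / sst
    return r2, f_stat

# Made-up data
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [2.0, 4.0, 5.0, 4.0, 5.0]
r2, f_stat = regression_anova(x, y)
print(r2, f_stat)
```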

Tukey’s range test (or Tukey’s post hoc test)

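- It is like a t-test on a pair of group means.

- Normality, sample independence, and variance equality (homoscedasticity) should be met.

- Take the pair of the largest mean and the smallest mean across groups (the same statistic can be computed for any other pair of means).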

- Compute the statistic q_s = (max_mean - min_mean) / SE,

where SE = sqrt(MSw / n), MSw is the Mean Square Within (from ANOVA) and n is the number of observations in each group.

MSw = SS_within / df_within = SUM_k[(n - 1) x (variance of group k)] / [(total number of individuals) - (number of groups)]

- Compare q_s against a critical q value (a value from the q table for a specific significance level, the “degrees of freedom Within” from ANOVA, and the number of groups)

- If q_s is larger than the critical q value, the two means are significantly different at that significance level.

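- The sample size needs to be the same across groups.

If not, use the Tukey-Kramer test https://real-statistics.com/one-way-analysis-of-variance-anova/unplanned-comparisons/tukey-kramer-test/

which replaces 1/n in SE with (1/n_i + 1/n_j) / 2, i.e. SE = sqrt((MSw / 2) x (1/n_i + 1/n_j)), where n_i and n_j are the sizes of the two groups being compared. With equal group sizes this reduces to sqrt(MSw / n).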
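The steps above can be sketched in plain Python as follows. This uses the Tukey-Kramer form of SE, which gives the same value as sqrt(MSw / n) when all groups have the same size. The function name `tukey_kramer_q` and the data are made up, and the critical value 4.34 is my reading of a standard q table for a 0.05 significance level, 3 groups and 6 degrees of freedom, so please check it against your own table.

```python
from itertools import combinations
from math import sqrt
from statistics import mean, variance

def tukey_kramer_q(groups):
    """Return ({(i, j): q_s}, df_within) for every pair of groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    df_within = n_total - k
    # MSw pools the sample variances (std.dev. squared), weighted by (n_k - 1)
    ms_within = sum((len(g) - 1) * variance(g) for g in groups) / df_within

    q_values = {}
    for i, j in combinations(range(k), 2):
        se = sqrt(ms_within / 2 * (1 / len(groups[i]) + 1 / len(groups[j])))
        q_values[(i, j)] = abs(mean(groups[i]) - mean(groups[j])) / se
    return q_values, df_within

# Made-up data: three groups of three observations each
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
q_values, df_within = tukey_kramer_q(groups)

q_crit = 4.34  # assumed table value: alpha = 0.05, 3 groups, df_within = 6
for pair, q_s in q_values.items():
    verdict = "significant" if q_s > q_crit else "not significant"
    print(pair, round(q_s, 3), verdict)
```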

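The critical q value depends only on the significance level, the number of groups, and the degrees of freedom within, not on how many pairs are actually compared. So, whether Tukey is run for all possible pairs or only some of them does not seem to affect Tukey’s results, and there seems to be no point in doing ANOVA before Tukey (this discussion agrees too: https://www.reddit.com/r/AskStatistics/comments/xwmzy8/anova_why_bother_if_you_can_run_posthoc_ttests/ )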

What to do when the data is not normally distributed

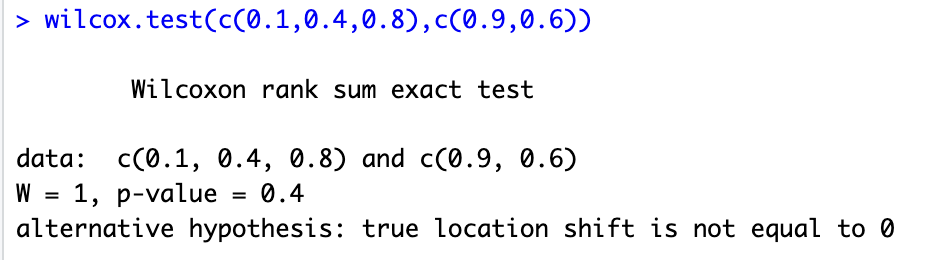

The Kruskal-Wallis test is the non-parametric counterpart of ANOVA. https://en.wikipedia.org/wiki/Kruskal–Wallis_test

“For analyzing the specific sample pairs for stochastic dominance, Dunn's test, pairwise Mann–Whitney tests with Bonferroni correction, or the more powerful but less well known Conover–Iman test are sometimes used.”
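For intuition, the Kruskal-Wallis statistic H works on the ranks of the pooled data instead of the raw values. Below is a minimal sketch in plain Python. The function names and the data are made up, tied values get their average rank, and the usual tie correction of H is left out to keep it short.

```python
def average_ranks(values):
    """Rank values from 1..N, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        end = pos
        # Extend the run while the next sorted value equals the current one
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        avg = (pos + end) / 2 + 1  # 1-based average rank of the tied run
        for idx in order[pos:end + 1]:
            ranks[idx] = avg
        pos = end + 1
    return ranks

def kruskal_wallis_h(groups):
    """Return H = 12 / (N (N + 1)) * sum(R_k^2 / n_k) - 3 (N + 1), no tie correction."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])  # R_k for this group
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)

# Made-up data without ties
groups = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
h_stat = kruskal_wallis_h(groups)
print(h_stat)
```

For larger samples, H is usually compared against a chi-squared distribution with (number of groups - 1) degrees of freedom.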

Why are ANOVA and Tukey not used in ggbetweenstats?

Welch's F test instead of ANOVA: https://rips-irsp.com/articles/10.5334/irsp.198
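As a rough sketch of what Welch's F test computes: instead of pooling the variances, each group is weighted by n_k / s_k² (its size divided by its own sample variance), and the denominator degrees of freedom are adjusted accordingly. The function name `welch_anova` and the data are made up for illustration, and the code assumes every group has a non-zero variance.

```python
from statistics import mean, variance

def welch_anova(groups):
    """Return (W, df1, df2) for Welch's one-way ANOVA."""
    k = len(groups)
    sizes = [len(g) for g in groups]
    means = [mean(g) for g in groups]
    # Weight of each group: n_k / s_k^2 (fails if a group has zero variance)
    weights = [n / variance(g) for n, g in zip(sizes, groups)]
    w_sum = sum(weights)
    weighted_mean = sum(w * m for w, m in zip(weights, means)) / w_sum

    numerator = sum(w * (m - weighted_mean) ** 2
                    for w, m in zip(weights, means)) / (k - 1)
    lam = sum((1 - w / w_sum) ** 2 / (n - 1) for w, n in zip(weights, sizes))
    denominator = 1 + 2 * (k - 2) / (k ** 2 - 1) * lam

    w_stat = numerator / denominator
    df1 = k - 1
    df2 = (k ** 2 - 1) / (3 * lam)
    return w_stat, df1, df2

# Made-up data: three groups of three observations each
groups = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [5.0, 6.0, 7.0]]
w_stat, df1, df2 = welch_anova(groups)
print(w_stat, df1, df2)
```

W is then compared against an F distribution with (df1, df2) degrees of freedom, where df2 is generally not an integer.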

"Student’s t-test and classical F-test ANOVA rely on the assumptions that two or more samples are independent, and that independent and identically distributed residuals are normal and have equal variances between groups." ... "Under realistic deviations from the assumption of equal variances, the classic F-test can yield severely biased results and lead to invalid statistical inferences. We examine two common alternatives to the F-test, namely the Welch’s ANOVA (W-test) and the Brown-Forsythe test (F*-test). Our simulations show that under a range of realistic scenarios, the W-test is a better alternative and we therefore recommend using the W-test by default when comparing means."

Games-Howell instead of Tukey: Probably because of the feature explained in this blog https://oceanone.hatenablog.com/entry/2020/06/28/035710

It says that the Games-Howell test is an alternative to Tukey-Kramer; while Tukey-Kramer requires homogeneous variances, Games-Howell does not assume that. This makes Games-Howell applicable to a wider range of cases, at the expense of statistical power when the variances in the data are in fact homogeneous.
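To show where the difference from Tukey-Kramer comes in, here is a minimal sketch of the Games-Howell statistic for one pair of groups, based on my understanding of the test: SE uses each group's own variance instead of the pooled MSw, and the degrees of freedom come from the Welch-Satterthwaite approximation. The function name `games_howell_pair` and the data are made up.

```python
from math import sqrt
from statistics import mean, variance

def games_howell_pair(a, b):
    """Return (q, df) for comparing the means of samples a and b."""
    va = variance(a) / len(a)  # s_a^2 / n_a
    vb = variance(b) / len(b)  # s_b^2 / n_b
    se = sqrt((va + vb) / 2)   # no pooled MSw, each group keeps its own variance
    q = abs(mean(a) - mean(b)) / se
    # Welch-Satterthwaite degrees of freedom for this pair
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return q, df

# Made-up data with unequal sizes and variances
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0, 8.0]
q, df = games_howell_pair(a, b)
print(q, df)
```

As with Tukey, q would be compared against a critical q value for the total number of groups, but using this pair-specific df instead of the degrees of freedom within from ANOVA.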
